The most remarkable discovery in all of astronomy is that the stars are made of atoms of the same kind as those on the earth.

Richard P. Feynman, American Theoretical Physicist

It’s not often that we happen to pause and ponder the very fundamentals – matter shredded to its finest scale – the elementary particles, that make up everything we are surrounded by, and even ourselves.

As we zoom into the microscopic world, we find a lively kingdom of these particles – a world on its own, subject to spookier and stranger dynamics than what we usually deal with.

We refer to (and study) this world of elementary particles through a branch of physics, called Particle Physics.

Particle Physics might deal with particles that are ‘unimaginably small’, but the subject itself is anything but small.

So, sit back and relax as we explore this domain thoroughly and take you through the intriguing world of particles.

What Are Elementary Particles?

Since ancient times, we’ve been trying to decipher what makes up the matter that we see and interact with, in our daily lives.

In our quest for answers, we inevitably encounter elementary particles, the building blocks of everything in the Universe.

While they are even smaller than the smallest point you would ever manage to draw, they still bear physical properties such as mass and charge.

It’s these smallest entities that make the biggest difference.

So to better understand them, let’s first revisit their journey and understand how they came to be known as they’re known today.

History of Elementary Particles

Elementary particles, themselves, have come a long way, undergoing heavy evolution under various theories & models.

Elements Before the Christ Era

450 BCs Five Classical Elements

Around 450 BC, the Greeks coined four fundamental elements of nature that everything in the world was supposed to be made up of – earth, fire, air, and water.

Plato, the famous Greek philosopher, was the first one to use the term ‘element’ to refer to air, fire, earth, and water.

He further introduced the idea of a fifth cosmic element, divergent from the existing four elements, under the name ‘aether‘.

Plato thought of this heavenly element as the one that filled this universe, more like space (or void), which were also the names it was later called (for obvious reasons).

However, Plato himself was satisfied with the idea of the four classical elements and chose to adopt the same.

Later, Aristotle, another Greek philosopher who also happened to be Plato’s disciple, emphasized his mentor’s ideas and added the fifth element to the norm (he was, however, not very fond of the term aether).

The ideology of the classical five elements was accepted by almost every other ancient culture.

These classical elements were also adopted by the Indian, Buddhist, and other cultures throughout Asian regions, with a slightly different meaning and name for the fifth element – space or void.

For instance, the void had different interpretations in different cultures. In Greek, void meant empty space while in some other cultures, the void was referred to as a continuous field (just like aether, or ether, to the Greeks).

400 BCs Atomism – Birth of the Atomic Theory

Around the 5th century BC, some philosophers began to ask a sharper question instead of simply accepting the ideas handed down through generations.

What if we keep dividing an object into halves?

How far can you go, breaking a stone in half, repeatedly? We can keep breaking it infinitely, right? Or can we?

The moment we can’t further divide the stone into smaller pieces is when we arrive at the most fundamental particle – ‘Atom’.

The word originates from the Greek word ‘Atomos’, meaning indivisible.

The theory of atomism was proposed by Greek philosopher Leucippus and his pupil Democritus around 400 BC.

Democritus saw these indivisible entities, which he named ‘atoms’, as the bedrock of not just all matter, but also abstracts like human perception and soul.

He believed that it was these atoms, of numerous shapes, sizes, and structures, that were responsible for everything, from mere objects to our perception & experience.

Meanwhile, at one corner of the earth, an Indian philosopher named Acharya Kanad, initially known by the name Kashyap, also proposed a similar theory.

He defined the smallest indivisible entity as Kan or Parmanu (meaning atom).

At the time (500-300 BC), atomism still wasn’t widely accepted, and the idea that everything is made of atoms and void was often criticized by other philosophers.

Aristotle denied the existence of void (specifically, the Greek interpretation of void) arguing that it violates physical principles, such as friction during motion.

Aristotle argued that the four classical elements instead of being made up of atoms were continuous and could be subdivided infinitely.

1661’s Corpuscularianism – How Do Little Bodies Transform?

In 1661, Robert Boyle proposed a set of theories referred to as corpuscularianism (that’s indeed a mouthful).

Corpuscularianism held that interactions between particles could result in the natural transformation of elements.

Robert Boyle claimed that elements could penetrate through each other changing their inner structure and properties.

It was a bit different from the theory of atoms (or atomism), as corpuscles, theoretically, could be divided.

This set it apart from the atomism of the time, which held atoms to be fundamentally indivisible, but it did help establish the foundation for the study of chemical reactions.

Classification of Elements into Periodic Tables

18th century onwards, elements saw an evolution in their classification, upon varying grounds, starting with that of the French chemist, Antoine Lavoisier.

1789’s Lavoisier’s Classification of Elements

In 1789, French chemist Antoine Lavoisier observed the physical properties of the known elements, such as rigidity, luster, and malleability, and attempted to classify them based on their metallic or non-metallic nature.

He even categorized light and heat as elements (so he thought) belonging to a category of elastic fluids, while some of the metallic oxides were categorized under the name ‘Earths’.

However, there was no place for elements exhibiting properties similar to both metals and non-metals.

1829’s Döbereiner’s Theory of Triads

In the year 1829, German chemist Johann Wolfgang Döbereiner observed similarities in the properties of elements and grouped them into sets of three that he called triads.

In his theory of triads, the middle element exhibited a mean value of the properties shown by the other two (the properties of the second element were basically the average of those of the first and third).
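The averaging rule is easy to check numerically. The sketch below is only an illustration: the triads chosen and the approximate modern atomic masses are assumptions added here (Döbereiner worked with the less precise values of his day), and the helper names are hypothetical.

```python
# Approximate modern atomic masses in atomic mass units (u).
# These values are an assumption of this sketch, not taken from the article.
TRIADS = {
    ("Li", "Na", "K"): (6.94, 22.99, 39.10),
    ("Ca", "Sr", "Ba"): (40.08, 87.62, 137.33),
    ("Cl", "Br", "I"): (35.45, 79.90, 126.90),
}


def triad_mean(first: float, third: float) -> float:
    """Arithmetic mean of the first and third members of a triad."""
    return (first + third) / 2


def triad_error(masses: tuple) -> float:
    """Relative gap between the predicted and actual middle mass."""
    first, middle, third = masses
    return abs(triad_mean(first, third) - middle) / middle


for names, masses in TRIADS.items():
    predicted = triad_mean(masses[0], masses[2])
    print(f"{'-'.join(names)}: predicted {predicted:.2f} u, "
          f"actual {masses[1]:.2f} u, off by {triad_error(masses):.1%}")
```

For these three triads the predicted middle mass lands within a couple of percent of the actual one, which is roughly the level of agreement the rule relies on.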

1864’s Newland’s Law of Octaves

Later, in 1864, scientist John Newlands arranged all known elements into a periodic table based on the increasing order of their atomic mass.

He found that every eighth element had properties similar to the first, analogous to the eighth note being similar to the first note in a musical octave (thus the name), and hence arranged them accordingly.

But, there was no room for elements that were yet to be discovered, which was a drawback of this classification.

1869’s Mendeleev’s Periodic Law

Finally, five years later i.e. in 1869, chemist Dmitri Mendeleev formulated his periodic law.

He revolutionized the classification of elements (which he, too, arranged in increasing order of atomic mass) by leaving room for undiscovered elements and correctly predicting their atomic masses and properties.

Mendeleev’s Periodic Law was succeeded by Henry Moseley’s Modern Periodic Table.

1913’s Modern Periodic Table

In 1913, English physicist Henry Moseley proposed the idea of classifying elements in increasing order of their atomic numbers (the number of protons in the nucleus) and not their mass, setting his periodic law apart from the earlier ones.

He arranged these elements according to the same into a table that came to be known as the Modern Periodic Table, which remains one of the most ‘looked at’ things (don’t you agree with that?), not just in the world of chemistry, but also physics.

Development of the Atomic Structure

To comprehend how these tiny atoms work and interact with each other, it was required to introduce theories that would describe their structure and mechanism.

Early 1800s Dalton’s Atomic Theory

In the 1800s, John Dalton embarked on his interpretation of the atomic theory now called Dalton’s Atomic Theory.

In his theory of the atom, as he understood, he argued –

- All matter is made up of small, indivisible particles called atoms, and all atoms of a given element are identical.

- Atoms can neither be created nor destroyed.

- Atoms of different elements vary in size and mass.

- These atoms rearrange themselves in a chemical reaction to form different substances.

His claim that atoms could neither be created nor destroyed gave an atomic explanation for the law of conservation of mass.

Drawbacks of Dalton’s Atomic Theory

Following later discoveries, it was found that the atom wasn’t the smallest indivisible particle, and this became one of the drawbacks of the model.

Moreover, Dalton’s theory couldn’t incorporate different isotopes of the same element.

But on the brighter side, it constructed the basis of many laws of chemistry that are still true to this date.

Late 1800s Discovery of the Electron

In the late 19th century, a series of cathode-ray experiments were conducted by scientists, which were carefully observed & studied.

In 1897, English physicist J.J. Thomson’s experimental conclusions gave an interesting shape to the atomic structure.

He studied the properties of cathode rays and observed tiny negatively charged subatomic particles, that we now gladly refer to as the electron.

He, hence, became the one to discover the first subatomic particle in history.

Based on his conclusions, he hypothesized a very low-mass particle, with a negative charge, that he called a corpuscle.

He believed that these negatively charged corpuscles swam in a sea of positive charge, which was the foundational idea of his Plum-Pudding Model (which we discuss in the coming section).

It was these corpuscles that later went on to be called ‘electrons’.

The name ‘electron’ itself was not new to the world, however: Irish physicist George J. Stoney had coined it in 1891 for the fundamental unit of charge, six years before Thomson’s cathode-ray experiments.

In a nutshell, the discovery of electrons clarified that atoms are not homogeneous but have constituent particles and complex structures.

Protons? Where? When?

Eleven years earlier, in 1886, German scientist Eugen Goldstein had observed anode rays (or canal rays) in experiments similar to those later performed by Thomson, confirming the existence of positively charged particles.

We’ve, herein, not used the term ‘protons’ for a reason, so you better stay tuned for the upcoming section.

Just like that, our understanding of atomic physics transformed, as we began to look at the building blocks of matter (atoms), not as indivisible particles, but as a horizon that held back a forbidden world.

Evolution of Atomic Models – Discovering the Nature of Subatomic Particles

“If, as I have reason to believe, I have disintegrated the nucleus of the atom, this is of greater significance than the war.”

Ernest Rutherford, Physicist (Father of Nuclear Physics)

As the world began to understand and ponder the atomic complexities, physics saw the emergence of atomic models that attempted to closely delineate a better picture of the atom.

Over the years, several atomic models were formulated by various scientists to comprehend what was actually happening in the microworld.

Let’s begin by peeking into the earliest description of the inner structure of an atom, dating back to the start of the 20th century – the century that revolutionized all of physics.

1904’s Plum Pudding Model

The Plum Pudding Model was a big step toward the development of atomic models.

It was first formulated by J.J. Thomson, in 1904, to describe the arrangement of negatively & positively charged particles inside the atom.

He hypothesized that the electrons (then corpuscles, as we read earlier) were embedded in a sea of positive charge spread throughout the atom, analogous to plums (or raisins) inside a pudding (hence the name).

He believed that the mass was uniformly distributed throughout, and the magnitude of both positive and negative charges was equal, thus neutralizing the atom overall.

Drawbacks of the Plum Pudding Model

Thomson’s model was quite a foundation. While it was able to explain the overall neutrality of the atom, it couldn’t justify some of the observed phenomena and turned out to be inconsistent with certain experiments.

His model failed to explain the stability of an atom, as the opposite charges can’t really be peaceful neighbors hugging each other.

It also failed to explain the origin of the spectral lines in hydrogen atoms, and it was inconsistent with the results of Rutherford’s alpha-particle scattering experiment (coming up next).

1911’s Rutherford’s Model of the Atom – Discovery of Nucleus

Between 1908 & 1913, physicists Hans Geiger and Ernest Marsden performed a series of experiments that came to be known as the gold foil or alpha-particle scattering experiments.

They bombarded a stream of alpha-particles (or simply, helium nuclei emitted as a ray) onto an extremely thin foil of gold (much thinner than a single human hair) and observed shocking results.

Contradicting the plum pudding model, a whopping percentage of alpha particles passed straight through the atom, meaning most of the atom was empty space.

Some of the alpha particles were deflected by little to large angles while a teeny-tiny fraction of them (1 in almost 20000) rebounded back straight along the direction they came from.

These experiments were carried out under the direction of Ernest Rutherford, who interpreted their results in the years that followed.

The observations hinted at the presence of a positively charged body at the very center of the atom that repelled the alpha particles and kept them from passing through (also being responsible for the deflection).

In the year 1911, Rutherford called the positively charged massive (here, ‘massive’ simply means – ‘with a considerable mass’) central body a nucleus, which constituted just a little less than the entire mass of the atom.

In 1920, he proposed that all elemental nuclei contained hydrogen nuclei (or protons) within them.

This was how the world saw the proton as another subatomic particle. You might have noticed that we didn’t use the term ‘protons’ while talking about the anode rays, above in the article.

That’s because anode rays were the discovery of positively charged particles, and not protons essentially (they weren’t called protons, back then).

Anyways, this model, which you just read about, is what we now study as Rutherford’s Atomic Model, which has its own imperfections.

Drawbacks of Rutherford’s Model – Stability & Line Spectrum

Rutherford’s Model, having changed our perspective by a good margin, still seemed to demand further tweaking for its inadequacy in explaining atomic stability.

This model stated that electrons revolve around the positively charged nucleus.

As we know, any particle in a circular orbit undergoes acceleration, and an accelerating charged particle radiates energy.

Hence, the revolving electrons will lose energy and eventually fall into the nucleus, rendering the atom non-existent (which is absurd).

This model of the atom also failed to explain the existence of definite lines in the hydrogen spectrum.

According to this model, electrons should emit a continuous energy spectrum but hydrogen atoms possess line spectra that cannot be explained by Rutherford’s model.

It was succeeded by Bohr’s Atomic Model, postulated by Niels Bohr.

1913’s Bohr’s Atomic Model

Danish Physicist Niels Bohr was one of those influential people of the 20th century who laid the foundation of Quantum Mechanics.

Bohr rejected the idea that electrons could orbit at any energy, as in Rutherford’s model, and postulated that the electrons, instead, revolved around the central nucleus in discrete orbits, each corresponding to a fixed energy level.

This way he could plausibly describe the emission spectrum of hydrogen that comprised a series of discrete wavelengths of light.

He also proposed that the light emitted from the atom was in the form of the energy lost by an electron in the process where it made a transition from a higher energy level (say, higher orbit) to a lower one (lower orbit).
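To make the idea of discrete wavelengths concrete, here is a minimal sketch that uses the Rydberg formula, which Bohr’s model reproduces for hydrogen. The formula and the value of the Rydberg constant are standard physics but are not given in this article, so treat them (and the function name) as assumptions of the example.

```python
# Rydberg constant in 1/m (infinite-nuclear-mass value); an assumption of
# this sketch, the article itself does not quote it.
RYDBERG = 1.0973731568e7


def transition_wavelength_nm(n_upper: int, n_lower: int) -> float:
    """Wavelength in nm of the light emitted when the electron in a
    hydrogen atom drops from orbit n_upper to orbit n_lower."""
    if n_upper <= n_lower:
        raise ValueError("emission requires n_upper > n_lower")
    # Rydberg formula: 1/lambda = R * (1/n_lower^2 - 1/n_upper^2)
    inverse_wavelength = RYDBERG * (1 / n_lower**2 - 1 / n_upper**2)
    return 1e9 / inverse_wavelength  # convert metres to nanometres


# Transitions ending on the second orbit give the visible (Balmer) lines.
for n in (3, 4, 5):
    print(f"{n} -> 2: {transition_wavelength_nm(n, 2):.1f} nm")
```

Each pair of orbits yields exactly one wavelength, which is why the hydrogen spectrum shows sharp lines instead of a continuous band.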

Bohr’s Model was, thus, unique as it could justify phenomena that the earlier models couldn’t. However, just like the previous models, Bohr’s wasn’t devoid of imperfections either.

Drawbacks of Bohr’s Model

Bohr’s Model fell short because it was limited to hydrogen (or hydrogen-like) atoms.

It could also not incorporate De-Broglie’s Hypothesis of the dual nature of matter.

Nonetheless, it was a solid ground for the Physics that would follow.

Boy oh boy, if the 20th century was not the ‘Century of Science’, I don’t know what is!

Anyway, let’s not forget the discovery of the very beloved sibling of the proton; up next.

1932’s Discovery of Neutron

In the year 1932, British physicist James Chadwick noticed some unknown radiation from beryllium passing through paraffin wax.

Particles of this radiation were almost as heavy as protons but, surprisingly, had no charge at all.

This led to the discovery of yet another particle called the neutron, which gained its name from its intrinsic property of possessing a neutral charge.

Our perspective of the inner atomic structure, comprising protons, neutrons, and electrons, has become both widened and clearer with time.

It was around this period when some physicists believed that everything about physics was known to them; isn’t that hilarious?

However, there were, obviously, certain gaps that were required to be filled; the science of the microworld was waiting to be unleashed in its full form.

1900s Enters the Quantum Mechanical Model

Alongside all the work on atomic models, there had been debates about the true nature of light, which later turned out to be a game-changer for the atomic theory.

In 1672, Newton took the lead, building upon the corpuscular theory introduced by Descartes in 1637, arguing that light behaves as particles.

Around the same time, scientists Robert Hooke and Christiaan Huygens introduced a wave viewpoint that explained the refraction of light better than the corpuscular theory could.

Finally, the wave viewpoint got a good stronghold in 1801, as it received support from the discovery of wave interference through experiments led by the scientist Thomas Young, which we now study as the famous Double-Slit Experiment.

The world was convinced of the wave nature of light.

Enter German physicist Max Planck – the father of quantum mechanics (some students, angsty from quantum mechanics, are not that big a fan of his).

In 1900, Max Planck introduced the idea of discrete quanta as a solution to the black-body radiation problem: the energy carried by electromagnetic waves is emitted and absorbed only in discrete quantities.

He managed to turn the simple understanding of the atomic theory upside down and took it to a whole new level of complexity (or maybe, simplification!), and the rest is a mind-boggling history.

This was the beginning of Quantum Mechanics – a step into a spookier world that, to this date, does not make much sense to many.

The known nature of the innocent world of particle physics was completely altered, as the surrealism of quantum mechanics entered the picture.

“Those who are not shocked when they first come across quantum theory cannot possibly have understood it.”

Niels Bohr, Danish Physicist

1914’s Wave-Particle Duality – Dual Nature of Radiation

Albert Einstein built further upon Planck’s idea. He proposed that a wave of light exists as a bundle of particles, where each particle is a packet (or ‘quantum’, plural ‘quanta’) of energy; these light quanta later came to be called ‘photons’.

He used the Planck-Einstein relation, which links the energy and frequency of light through the famous equation E = h𝜈, to explain the existing experimental data on the photoelectric effect (an explanation that also won him the Nobel Prize).
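As a quick worked example of E = h𝜈, the sketch below computes the energy of a single photon. The constants are the exact SI values; the sample frequency (roughly that of green light) and the function names are illustrative choices, not something taken from the article.

```python
PLANCK_H = 6.62607015e-34        # Planck's constant in J*s (exact SI value)
ELECTRON_VOLT = 1.602176634e-19  # joules in one electronvolt (exact SI value)


def photon_energy_joules(frequency_hz: float) -> float:
    """Energy of one photon, E = h * nu, in joules."""
    return PLANCK_H * frequency_hz


def photon_energy_ev(frequency_hz: float) -> float:
    """Same energy expressed in electronvolts."""
    return photon_energy_joules(frequency_hz) / ELECTRON_VOLT


green_light_hz = 5.4e14  # illustrative frequency, roughly green light
print(f"{photon_energy_joules(green_light_hz):.3e} J")
print(f"{photon_energy_ev(green_light_hz):.2f} eV")
```

Because the energy depends only on frequency, a brighter beam of the same color carries more photons, not more energetic ones, which is the key to explaining the photoelectric effect.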

In 1914, Robert A. Millikan confirmed Einstein’s hypothesis through highly accurate measurements of Planck’s constant from the photoelectric effect, a phenomenon that the wave theory of light had been unable to explain.

This clashed again with the prevailing wave nature of light and suggested that it shows a particle-like behavior.

Not just ordinary people, but also scientists were torn between the particle and wave nature of light (yes, scientists aren’t ordinary people, are they?).

These were the first signs of wave-particle duality that the world saw.

1924’s Dual Nature of Matter

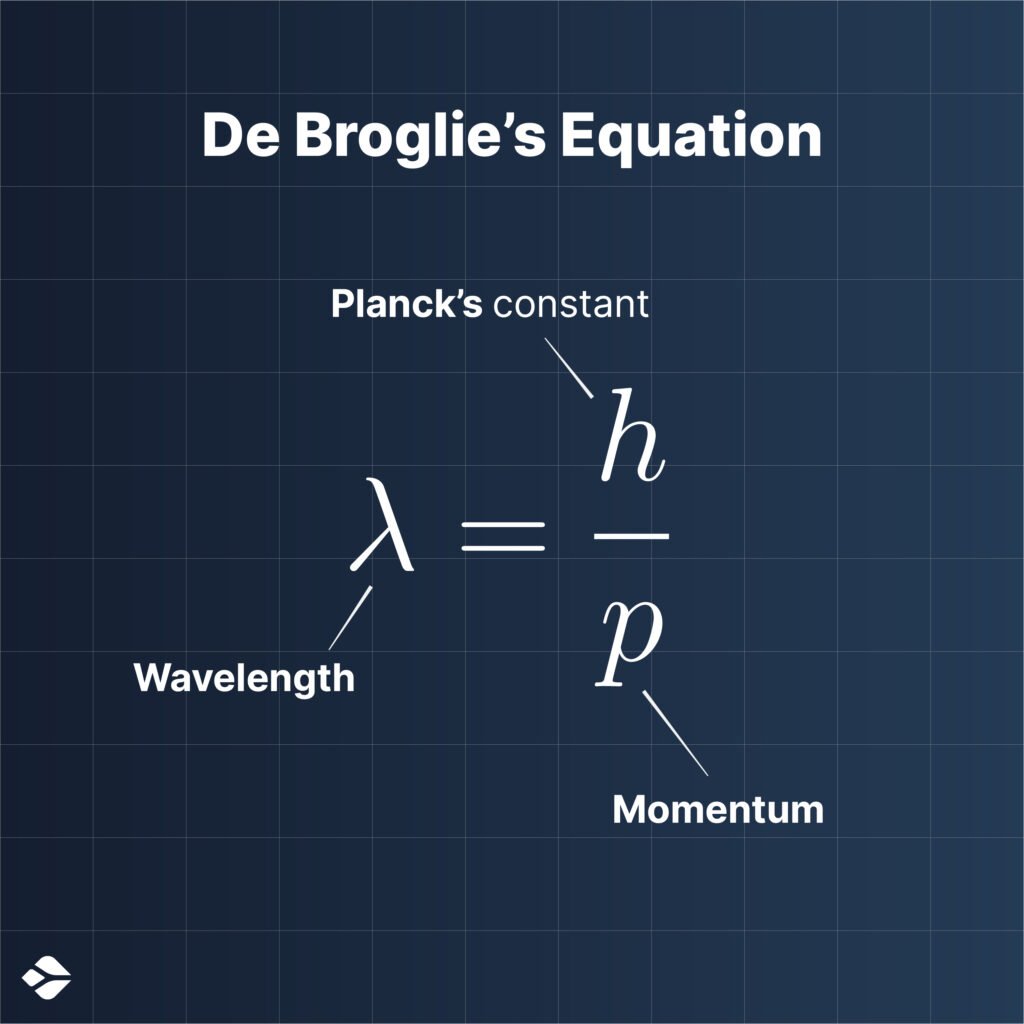

In 1924, French physicist Louis de Broglie entered the battle and hypothesized that not just light, but all particles (matter) showcase this dual behavior (minds blown!). Starting from the relation h𝜈₀ = m₀c², which combines Planck’s and Einstein’s expressions for energy, he arrived at the famous de Broglie equation, λ = h/p.

De-Broglie’s description can be visualized by a particle moving in space as a discrete wave packet.

The de-Broglie Equation established a link between the wavelength of the particle’s matter wave (wave associated with the particle) and the particle’s momentum; where one was inversely proportional to the other.
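A short numerical sketch of λ = h/p shows why matter waves matter for electrons but go unnoticed for everyday objects. The constants are standard values; the speeds, the baseball, and the non-relativistic momentum p = mv are assumptions chosen purely for illustration.

```python
PLANCK_H = 6.62607015e-34      # Planck's constant in J*s (exact SI value)
ELECTRON_MASS = 9.1093837e-31  # electron rest mass in kg


def de_broglie_wavelength(mass_kg: float, speed_m_s: float) -> float:
    """Matter wavelength lambda = h / p, using the non-relativistic
    momentum p = m * v (fine for speeds far below that of light)."""
    momentum = mass_kg * speed_m_s
    return PLANCK_H / momentum


# An electron moving at 1,000 km/s versus a 145 g baseball at 40 m/s.
electron = de_broglie_wavelength(ELECTRON_MASS, 1.0e6)
baseball = de_broglie_wavelength(0.145, 40.0)
print(f"electron: {electron:.3e} m")
print(f"baseball: {baseball:.3e} m")
```

The electron’s wavelength comes out comparable to the spacing between atoms in a crystal, which is why crystals can diffract electrons, while the baseball’s is far too small to ever be observed.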

Later, de Broglie’s hypothesis was confirmed by electron diffraction experiments, including the Davisson-Germer experiment.

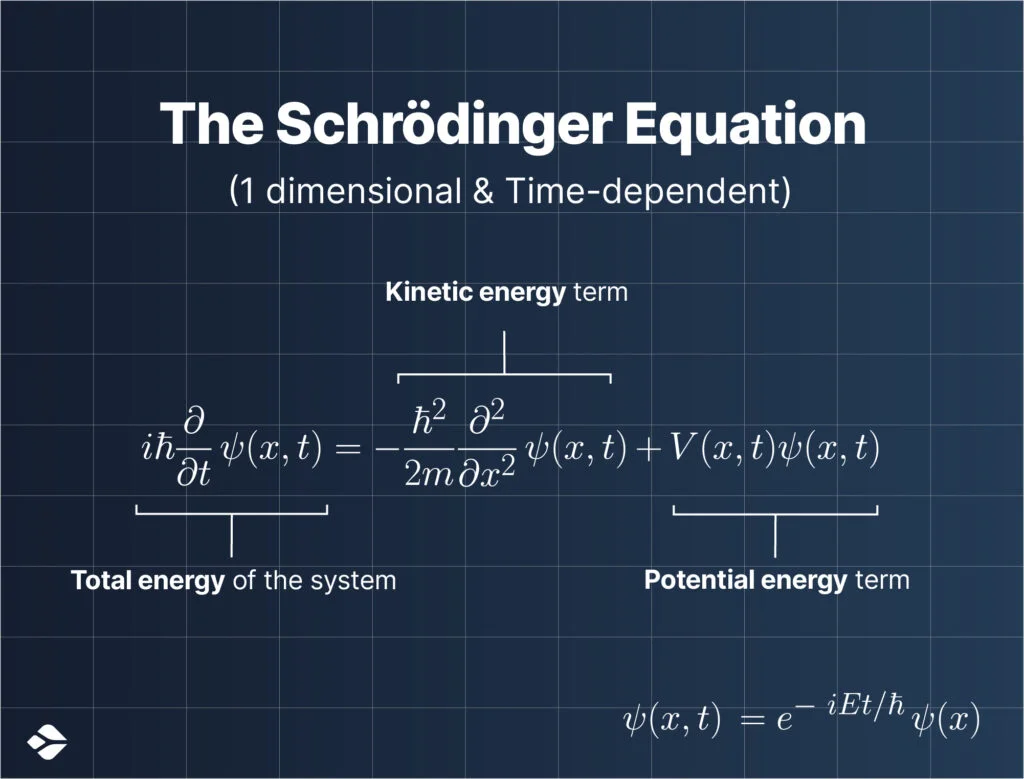

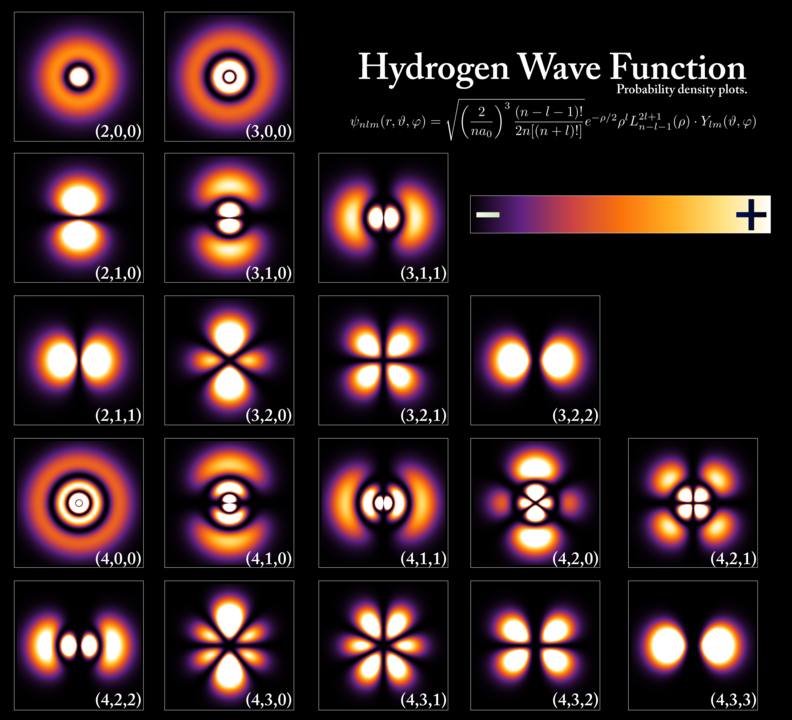

Inspired by de Broglie’s idea, Austrian physicist Erwin Schrödinger thought that if all particles (also) behave as waves, then there must be some mathematical function (a wavefunction) that would describe them.

1926’s Introduction of the Schrödinger Equation

In 1926, he formulated an equation, now called the Schrödinger Equation, which talked about the details of the wavefunction of a particle, denoted by the symbol psi (Ψ). The true nature of Ψ was still not understood though.

The Schrödinger equation was able to successfully reproduce the results of the previous atomic models.

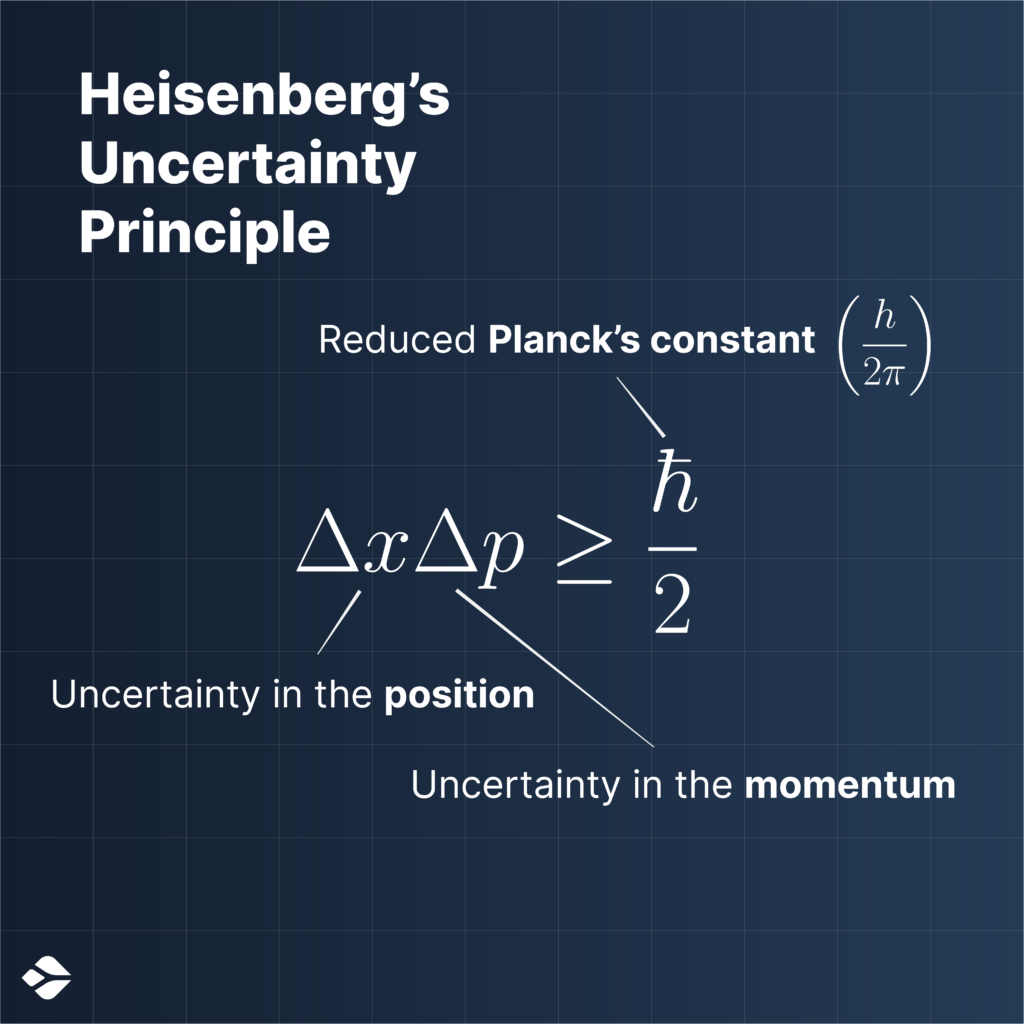

1927’s Heisenberg’s Uncertainty Principle

Later, in 1927, Werner Heisenberg introduced his uncertainty principle. It grew out of his earlier matrix mechanics (1925), which described an electron’s behavior only in terms of observable quantities, such as the frequencies of light emitted and absorbed by an atom.

In his theory, he never discussed the exact physical extent of an electron’s position or momentum at any time, which wreaked havoc among scientists since the prevailing atomic models consisted of electrons with definite positions and momentum.

After years of debate on the uncertainty principle among scientists (like Heisenberg, Bohr, and Einstein), Heisenberg finally came up with a clear statement about the Uncertainty Principle:

One can never know with perfect accuracy both of those two important factors which determine the movement of one of the smallest particles—its position and its velocity (or momentum). It is impossible to accurately determine both the position and velocity of a particle at the same instant.

Werner Heisenberg, German Theoretical Physicist
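The quote states the principle in words; in its usual quantitative form it reads Δx · Δp ≥ ħ/2. That inequality is standard physics but is not written out in this article, so the sketch below should be read as an illustration under that assumption, with hypothetical function names and an illustrative confinement length of about one atomic diameter.

```python
HBAR = 1.054571817e-34         # reduced Planck constant in J*s
ELECTRON_MASS = 9.1093837e-31  # electron rest mass in kg


def min_momentum_uncertainty(delta_x_m: float) -> float:
    """Smallest momentum spread allowed by delta_x * delta_p >= hbar / 2."""
    return HBAR / (2 * delta_x_m)


def min_velocity_uncertainty(delta_x_m: float, mass_kg: float) -> float:
    """Matching velocity spread, using the non-relativistic p = m * v."""
    return min_momentum_uncertainty(delta_x_m) / mass_kg


atom_size_m = 1.0e-10  # illustrative: roughly the diameter of an atom
dv = min_velocity_uncertainty(atom_size_m, ELECTRON_MASS)
print(f"velocity uncertainty: at least {dv:.2e} m/s")
```

Pinning an electron down to the size of an atom leaves its velocity uncertain by hundreds of kilometres per second, so the notion of a neat, well-defined orbit stops making sense.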

The picture of an atom with a positive central nucleus and electrons revolving around it, aligned neatly in certain orbits, was entirely ruined. This paved the way for the Quantum Cloud Model.

Transit to the Quantum Cloud Model

German physicist Max Born was able to interpret the wave function psi (Ψ) in the Schrödinger wave equation as follows:

Solving for the wave function through the Schrödinger equation, we can get the probability amplitude for a quantum object (a particle) assigned to each point in space (at each point in time).

In simpler words, the Schrödinger equation could be solved for the wave function of the particle, which in turn was a probability amplitude.

This probability amplitude then could tell us about the possible locations and other required information where one could find that quantum object.

So, it turned out that a particle (like an electron) does not have a single definite position before it is measured (it is in a superposition of positions), but the solution of the wave equation can tell us the probability of finding that particle at a given place.
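To see how a wave function turns into probabilities, here is a minimal sketch using the textbook "particle in a one-dimensional box". That system, its wave function, and the rule that the probability density is the squared magnitude of Ψ are standard results that this article does not spell out, so they are assumptions of the example; the function names are hypothetical.

```python
import math


def box_wavefunction(x: float, n: int = 1, length: float = 1.0) -> float:
    """Stationary-state wave function of a particle confined to [0, length]."""
    return math.sqrt(2 / length) * math.sin(n * math.pi * x / length)


def probability_between(a: float, b: float, n: int = 1,
                        length: float = 1.0, steps: int = 10_000) -> float:
    """Probability of finding the particle between a and b, obtained by
    integrating |psi|^2 with the midpoint rule."""
    width = (b - a) / steps
    total = 0.0
    for i in range(steps):
        x = a + (i + 0.5) * width
        total += box_wavefunction(x, n, length) ** 2 * width
    return total


print(f"anywhere in the box: {probability_between(0.0, 1.0):.4f}")
print(f"left half:           {probability_between(0.0, 0.5):.4f}")
print(f"middle half:         {probability_between(0.25, 0.75):.4f}")
```

The probabilities over the whole box add up to one, while the middle of the box is favored over the edges in the lowest-energy state: the wave function does not say where the particle is, only how likely each region is.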

In this way, an atom can be visualized as a central nucleus surrounded by clouds of electrons called orbitals (not orbits), which represent the regions where an electron is most likely to be found.

1967 Onwards: Standard Model of Particle Physics

Particle physicists have conducted numerous collision experiments with protons, neutrons, and electrons in particle accelerators and observed how they scatter.

These experiments discovered many unknown particles such as pions, sigma particles, omega particles, and more.

Ever since, physicists have been trying to better understand Particle Physics by adopting a model that would categorize and explain, both, the elementary particles that we know to date and the fundamental forces of nature (along with the particles associated with these forces).

What we came up with, came to be known as the Standard Model of Elementary Particles – the model that revolutionized particle physics.

It all started when physicist Steven Weinberg published his paper, ‘A Model of Leptons’, in 1967.

His three-page-long Nobel Prize (1979) winning paper laid the foundation for the formulation of the Standard Model, while physicists like Sheldon Lee Glashow and Howard Georgi (the former also shared the 1979 Nobel Prize with Weinberg) also made heavy contributions to the same.

The term “Standard Model”, however, was first coined by Abraham Pais and Sam Treiman in 1975.

Looking at this colorful table, we observe that it is divided into two fundamental classes of particles – Fermions (the blue & red ones) and Bosons (the grey ones).

So everything that you know of, either is or is made of one of these two.

It was, once, the atom that was thought to be the most fundamental particle in this world, and now we’re down to fermions and bosons (wow, the evolution!).

What are Fermions?

Fermions are the particles that follow Pauli’s exclusion principle, which means that no two identical fermions can occupy the same quantum state.

Fermions have a spin quantum number of half-integer values like 1/2, 3/2, 5/2, and so on.

Now the fermions themselves consist of two different kinds of particles – Quarks and Leptons. Studying them both will give us a better idea of the fermions on a general basis.

Meet the Quirky Quarks

It turns out that even subatomic particles like protons are made up of something – but what exactly?

The answer is quarks. They are fundamental particles that are held together by the strong force (refer to Fundamental Forces of Nature in this article, for more clarity), and consequently, make up the hadrons.

Well, the word ‘hadron’ might sound unfamiliar, but it’s something you’ve already read about – two of the most stable hadrons are protons and neutrons.

So it is now self-explanatory that different quarks combine together to form particles we refer to as hadrons.

Types of Quarks

Quarks initially came in three flavors, yeah, flavors (physicists have mastered the art of nomenclature).

These flavors were up, down, and strange; bidding adieu to the Greek letters.

Subsequently, three more flavors of quarks were discovered, namely charm, bottom, and top. These are heavier and pretty unstable, and they bring the total to three pairs of quarks, as we know them today.

The Charge on Quarks

Quarks are different from other particles in that their charge is not an integral multiple of the elementary charge e, as the principle of charge quantization is usually stated.

Instead, their charge comes in fractions like 2e/3 (for the up quark) and -1e/3 (for the down quark), where ‘e’ is the magnitude of the charge of an electron.

Thus, quarks on their own do not follow the usual charge quantization principle. Out of the six quarks, only two contribute to the structure of protons and neutrons.

Up and Down quarks combine in a group of three with varying compositions, deciding if the particle is a proton or neutron.

A proton consists of two up quarks and one down quark, which gives it a resultant charge of 1e (4e/3 minus 1e/3).

In a neutron, one up quark and two down quarks result in a net-zero charge.
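The charge bookkeeping above can be checked with exact fractions. This is just a small sketch: the single-letter labels (u, d) and the function name are conventions chosen for the example, and charges are expressed in units of e.

```python
from fractions import Fraction

# Quark charges in units of the elementary charge e, as given in the text.
QUARK_CHARGE = {
    "u": Fraction(2, 3),   # up quark
    "d": Fraction(-1, 3),  # down quark
}


def hadron_charge(quarks: str) -> Fraction:
    """Total charge (in units of e) of a particle made of the given quarks."""
    return sum((QUARK_CHARGE[q] for q in quarks), Fraction(0))


print("proton  (uud):", hadron_charge("uud"))
print("neutron (udd):", hadron_charge("udd"))
```

Using `Fraction` instead of floating-point numbers keeps the thirds exact, so the proton’s charge comes out as a whole unit and the neutron’s as exactly zero.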

Another quite interesting fact is that quarks can’t exist individually, i.e. they can only exist in combined form, and no one has ever observed an independent quark yet.

Now, let’s talk about the other type of fermions – leptons.

What are Leptons?

Leptons are a family of particles that consist of muons, tau, neutrinos, and also the famous – electrons (a very famous lepton indeed).

They are different from quarks as they do not take part in strong interaction and only respond to weak, electromagnetic, and gravitational forces.

Types of Leptons

So far, there have been six leptons, occurring in pairs (just like the quarks).

The neutrino was first proposed during the study of β-decay, a process governed by the weak force (in β⁻ decay, a neutron turns into a proton and emits an electron and an antineutrino; in β⁺ decay, a proton turns into a neutron and emits a positron and a neutrino).

Neutrinos are also called ‘ghost particles’ because they are invisible and very difficult to detect: they carry no electric charge and do not interact through electromagnetism.

These neutrinos come in huge bundles (from stars, supernovae, etc.) racing toward our planet.

Fun Fact: Trillions of neutrinos are passing through your body as you’re reading this.

As for the rest of them, the muon was discovered while studying cosmic radiation coming from space (obviously).

They behaved slightly differently from electrons and other particles, as observed by scientists Carl D. Anderson and Seth Neddermeyer.

Tau is one of the recent discoveries that was theorized independently, and then later, was found experimentally as well.

And, of course, we have already discussed the most famous lepton, electron, haven’t we?

What are Bosons?

Bosons are the latter of the two fundamental classes of particles.

Bosons include the particles that carry forces (a.k.a. force carriers), and unlike fermions, they do not obey Pauli’s Exclusion Principle, so any number of them can occupy the same quantum state.

They have a spin quantum number of integer values (0, 1, 2, and so on) and they are the ones that carry the four fundamental forces of nature, which are coming up next.

Fundamental Forces of Nature in the Standard Model

The Standard Model also describes three of the four fundamental forces of nature i.e. Strong Nuclear Force, Weak Nuclear Force, and Electromagnetic Force.

Although Gravity exists as the fourth fundamental force of nature, the Standard Model doesn’t seem to work well with it, at least so far.

Apart from that, the General Theory of Relativity portrays gravity as the curvature (or bending) of four-dimensional spacetime, instead of as a force (as in Newtonian Physics); but that is a subject for another article.

For now, the question is:

How do the Fundamental Forces Play?

According to Quantum Field Theory (QFT), each of these forces has its own carrier particles (which, as the name suggests, carry the force). These are the gauge bosons among the bosons discussed earlier, and they have a spin of 1.

Let’s have a look at the bosons corresponding to each force:

- The Strong Nuclear Force is carried by gluons, following the rules of Quantum Chromodynamics (QCD). If it were not for these gluons, quarks wouldn’t be bound into nucleons (protons & neutrons), and the nucleons wouldn’t stay glued together in the nucleus against the mutual electric repulsion of the protons.

- The Weak Nuclear Force is carried by the W and Z Bosons. It plays a vital role in β-decay. The weak force, just like the strong force, acts only over very short distances.

- The Electromagnetic Force is carried by photons, which charged particles exchange with one another, as described by Quantum Electrodynamics (QED).
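To get a feel for why the weak force is short-ranged while electromagnetism is not, here is a rough sketch. It uses a common rule of thumb that is not part of this article: the range of a force is roughly ħ/(mc), where m is the mass of its carrier. The carrier masses and the value of ħc below are approximate reference values assumed for illustration. The estimate does not apply to gluons, whose short range comes from confinement rather than from mass.

```python
HBAR_C_MEV_FM = 197.327  # ħc in MeV·fm (assumed standard value)
FM_TO_M = 1e-15          # one femtometre in metres

# Approximate carrier masses in MeV/c^2 (assumed reference values).
CARRIER_MASS_MEV = {
    "photon": 0.0,
    "W boson": 80_400.0,
    "Z boson": 91_200.0,
}


def estimated_range_m(mass_mev):
    """Rule-of-thumb range ħ/(mc) in metres; a massless carrier gives infinite range."""
    if mass_mev == 0:
        return float("inf")
    return HBAR_C_MEV_FM / mass_mev * FM_TO_M


for name, mass in CARRIER_MASS_MEV.items():
    print(f"{name}: {estimated_range_m(mass):.2e} m")
```

Under these assumptions the W and Z bosons give ranges of the order of 10^-18 m, far smaller than a proton, while the massless photon gives an unlimited range.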

Last but not least, the class of Bosons also comprises a ‘God’ Particle (yes, that is what it is often called) in the Standard Model.

Higgs Boson – The “God Particle”

The nickname ‘God Particle’ goes back to the American experimental physicist Leon Lederman, who reportedly wanted to call the particle the ‘Goddamn Particle’.

The nickname was a sarcastic depiction of how difficult it was to detect the particle.

Well, it does have a more decent name – Higgs Boson, honoring the British physicist Peter Higgs, who proposed its existence in the 1960s as a solution to a problem in the electroweak theory which unifies the weak force and electromagnetism.

The existence of the Higgs boson was confirmed in 2012 by the Large Hadron Collider (LHC) at CERN, through experiments carried out by the ATLAS and CMS collaborations.

The Higgs Boson is the fundamental particle associated with the Higgs field – a field that gives mass to the elementary particles that everything around us is built from.

The Higgs boson itself is quite unstable and decays into other particles almost instantaneously, which is why high-energy particle accelerators like the LHC are required to detect its presence indirectly.

Elementary particles don’t have a mass of their own but ‘get it’ only by interacting with the Higgs Field.

Quantum Field Theory (QFT) suggests that a particle is but a disturbance (or an excitation) in its corresponding field: every kind of particle has its own field, and the particle is born from it.

The Higgs Boson, similarly, is a quantized manifestation of the Higgs Field, a field that generates mass by interacting with the fields of other fundamental particles.

Massive particles are massive because they (or their fields) interact more with the Higgs Field, whereas lighter particles are lighter as they interact less.

You guessed it, massless particles don’t interact with the Higgs field at all.
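The idea that heavier particles interact more strongly with the Higgs Field can be put into numbers. The sketch below uses a relation that does not appear in this article: for fermions, the coupling strength is y = √2 · m / v, where v ≈ 246 GeV is the strength of the Higgs Field in empty space. The masses are approximate values assumed for illustration.

```python
import math

HIGGS_VEV_GEV = 246.22  # v, assumed approximate value in GeV

# Approximate fermion masses in GeV/c^2 (assumed reference values).
FERMION_MASS_GEV = {
    "electron": 0.000511,
    "muon": 0.1057,
    "top quark": 172.7,
}


def higgs_coupling(mass_gev):
    """Dimensionless coupling y = sqrt(2) * m / v; a massless particle gives 0."""
    return math.sqrt(2) * mass_gev / HIGGS_VEV_GEV


for name, mass in FERMION_MASS_GEV.items():
    print(f"{name}: y = {higgs_coupling(mass):.3g}")
```

With these inputs the top quark, the heaviest known elementary particle, comes out with a coupling close to 1, while the electron's coupling is several hundred thousand times smaller.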

The Higgs Boson is a scalar boson with zero spin, and the Higgs Field is a scalar field. A scalar field has a magnitude but no direction at each point, much like temperature, which measures only how hot a place is.

Doesn’t all of that fascinate you enough to study the depths of Quantum Mechanics? Ah Physics, you beauty!

Quantum Field Theory (which happens to be the base of such topics as the Higgs Field) is itself an extremely fascinating domain in physics, but for the sake of relevance, let’s save it for another article.

Trust me, you wouldn’t want to lose yourself in the essence of such a complex topic when you’ve already come so far in wrapping your head around the world of particles.

Antimatter – Most Expensive Substance on Earth

As the name suggests, antimatter is made of particles that closely resemble ordinary matter particles, but there’s a catch: they have the same mass as their ‘matter’ counterparts and exactly the opposite charge and other quantum numbers.

For example, the antiparticle of the electron is the positron, which has a positive charge (contrary to the electron, which has a negative charge).

If an antimatter particle comes into contact with its matter counterpart, the two annihilate (destroy each other), and their mass is converted into pure energy.
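How much energy is that? A minimal sketch, assuming both particles are at rest so that the released energy is just E = 2mc² (one mc² for the particle and one for its antiparticle). The constants are standard reference values, not figures from this article.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0   # exact by definition
ELECTRON_REST_ENERGY_MEV = 0.511     # approximate rest energy of an electron


def annihilation_energy_joules(particle_mass_kg):
    """Energy released when a particle of this mass meets its antiparticle, both at rest."""
    return 2.0 * particle_mass_kg * SPEED_OF_LIGHT_M_S ** 2


# One electron meeting one positron: twice the electron rest energy.
pair_energy_mev = 2 * ELECTRON_REST_ENERGY_MEV

# One gram of matter meeting one gram of antimatter.
gram_energy_j = annihilation_energy_joules(1e-3)

print(f"electron + positron: {pair_energy_mev:.3f} MeV")
print(f"1 g + 1 g: {gram_energy_j:.3e} J")
```

The second number is of the order of 10^14 joules, which is why even tiny amounts of antimatter represent an enormous amount of energy.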

The existence of antimatter was first predicted by Paul Dirac, in 1928, when he was trying to combine Quantum Mechanics with Special Relativity in the Dirac equation.

He found that the Dirac equation has two kinds of solutions. One describes the familiar electron with its negative charge, and the other came to be understood as a particle with the same mass but a positive charge: the positron, which Carl D. Anderson discovered in 1932.

Antimatter particles can be found naturally in cosmic radiation, and some particles, such as photons and Higgs bosons, are their own antiparticles. Whether neutrinos also belong to this group is still an open question.

There is also an Antimatter Factory at CERN (Conseil Européen pour la Recherche Nucléaire, or European Council for Nuclear Research), which produces antiparticles such as antiprotons and uses them to make antihydrogen.

Production of antimatter is a very costly and slow process.

One gram of antihydrogen would cost trillions of dollars and, at today’s production rates, would take far longer than thousands of years to make, since only a few atoms are produced at a time.

This makes antimatter the most expensive substance on Earth.
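To see why a gram is so far out of reach, it helps to count atoms. The sketch below assumes that an antihydrogen atom has the same mass as a hydrogen atom, and the production rate is a purely hypothetical parameter chosen for illustration, not a real CERN figure.

```python
AVOGADRO = 6.02214076e23           # atoms per mole (exact by definition)
HYDROGEN_MOLAR_MASS_G = 1.008      # g/mol, assumed equal for antihydrogen
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def atoms_in_grams(grams):
    """Number of (anti)hydrogen atoms in the given mass."""
    return grams / HYDROGEN_MOLAR_MASS_G * AVOGADRO


def years_to_produce(grams, atoms_per_second):
    """Years needed at a constant, hypothetical production rate."""
    return atoms_in_grams(grams) / atoms_per_second / SECONDS_PER_YEAR


atoms = atoms_in_grams(1.0)
print(f"atoms in 1 g: {atoms:.3e}")
# Hypothetical rate of one atom per second, for illustration only.
print(f"years at 1 atom/s: {years_to_produce(1.0, 1.0):.3e}")
```

Even at the generous made-up rate of one atom every second, a gram would take vastly longer than the age of the Universe, so the production time is dominated by the sheer number of atoms involved.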

Refer to Baryon Asymmetry in this article to learn about one of the biggest problems in physics related to antimatter.

Features of the Standard Model

The Standard Model is one of the most successful theories in physics.

Many physicists have contributed to its development, and it has been rigorously tested in experiments, passing with flying colors almost every time.

It was developed largely through abstract theory and mathematics, yet it manages to describe the nature of reality quite elegantly.

It wouldn’t be unfair to call it a miracle of mathematics; after all, mathematics is the very language of our Universe, and what is not a miracle of mathematics?

There is a reason why more than a quarter of all the Nobel Prizes in Physics were awarded for work that contributed to the development of the Standard Model.

The discovery of the Higgs Boson, back in 2012, was one of the most significant accomplishments for particle physicists (if not the most).

It has been over 50 years, and no experiment has yet overturned the Standard Model. But, as nature suggests, with merits come a few demerits.

Drawbacks of the Standard Model

The Standard Model has proved its worth quite well in the global scientific community. However, it can’t be claimed to be a complete description of the Universe.

It still fails to explain a handful of things; like what, you might wonder. Well, for starters…

Dark Matter Discrepancy

You would be surprised to know (or maybe not; but you gotta admit, it is a surprising fact indeed) that all the matter that we can see or know of, from the smallest toys to the brightest stars, accounts for barely 5 percent of what the universe is composed of.

A gigantic 95% of the universe is made of dark energy (68%) and dark matter (27%).

Physicists have a hard time explaining the behavior of galaxies, and other astrophysical effects and observations, with the existing theories.

They concluded that there must be more matter than we can see, to better explain why things are the way they are at a cosmic scale, and so they hypothesized dark matter.

The problem lies in the fact that dark matter (as the name suggests) cannot be seen, because it seems to interact neither with light (or electromagnetic waves) nor with the other fields described in our Standard Model.

The model thus has no particle or mechanism that would plausibly account for dark matter.

Missing Carrier of Gravity

The Standard Model doesn’t include the gravitational force (something you might have guessed in the section where we talked about carrier particles), which is one of the most discussed open problems among particle physicists.

It refuses to work with the theory of General Relativity – the best theory describing gravity as of today.

Every other force has its carrier particle (the boson), but no such particle has been found for gravity.

Although the graviton is hypothesized to be the carrier particle for gravity, it has never been observed, and it doesn’t fit consistently into the mathematics of the Standard Model.

Rather Massive Neutrino

The mass of the neutrino is yet another conundrum that the Standard Model hasn’t been able to explain.

According to the Standard Model, a neutrino should be massless, but experimental results show that it has a non-zero mass.

While the mass is tiny, it can’t be ignored.

So, where is the neutrino getting its mass from? Well, that remains a question unanswered.

Baryon Asymmetry

The Standard Model also can’t account for the asymmetry between matter and antimatter particles.

It is thought that the Big Bang must have created equal amounts of matter and antimatter. If every such matter-antimatter pair had annihilated back then, how come we still see (so much) matter around us?

This asymmetry between matter & antimatter, which remains one of the biggest unsolved mysteries in physics, is also known as the baryon asymmetry.

The Standard Model doesn’t explain why matter quantitatively dominates our universe.

What’s Next in the World of Particles?

The Standard Model, as you see, is quite remarkable in its own way. However, it still can’t be regarded as the ultimate theory that describes the nature of reality.

Physicists are trying to formulate a more generalized theory that can possibly fill up the holes left by the Standard Model.

Ideas like Loop Quantum Gravity, String Theory, and Supersymmetry all attempt to go beyond the Standard Model, some of them aiming at a Theory of Everything, and they are among the candidates that physicists are currently working on.

Despite our tremendous feats and knowledge, there is so much that we do not know, and that is what feeds our hunger for learning.

Hopefully, someday, we will be able to unmask reality to its cardinal nature, but until then, you get to read such articles, and we get to write them, all posing big questions on the face of the Universe.

“We have found it of paramount importance that in order to progress we must recognize our ignorance and leave room for doubt. Scientific knowledge is a body of statements of varying degrees of certainty – some most unsure, some nearly sure, but none absolutely certain.”

Richard P. Feynman, American Theoretical Physicist

Nobel Prizes in the History of Particle Physics

- Max Planck won the 1918 Nobel Prize in Physics “in recognition of the services he rendered to the advancement of Physics by his discovery of energy quanta.”

- Albert Einstein was awarded the 1921 Nobel Prize in Physics “for his discovery of the law of the photoelectric effect”

- Niels Bohr received the 1922 Nobel Prize in Physics “for his services in the investigation of the structure of atoms and of the radiation emanating from them.”

- Robert Andrews Millikan was awarded the 1923 Nobel Prize “for his work on the elementary charge of electricity and on the photoelectric effect”.

- The 1929 Nobel Prize in Physics was awarded to Louis de Broglie “for his discovery of the wave nature of electrons”. De Broglie was the first person to win the Nobel Prize for a Ph.D. thesis.

- Werner Heisenberg received the 1932 Nobel Prize in Physics “for the creation of quantum mechanics, the application of which has, inter alia, led to the discovery of the allotropic forms of hydrogen.”

- James Chadwick won the 1935 Nobel Prize in Physics “for the discovery of the neutron.”

- Clinton Davisson and George Paget Thomson shared the 1937 Nobel Prize in Physics “for their experimental discovery of the diffraction of electrons by crystals” (Davisson made the discovery in the famous Davisson–Germer Experiment, and Thomson independently discovered electron diffraction at about the same time).

- Max Born won the 1954 Nobel Prize in Physics “for his fundamental research in quantum mechanics, especially for his statistical interpretation of the wavefunction” (Walther Bothe also shared the prize that year “for the coincidence method and his discoveries made therewith”).

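- Steven Weinberg, Sheldon Lee Glashow, and Abdus Salam shared the 1979 Nobel Prize in Physics “for their contributions to the theory of the unified weak and electromagnetic interaction between elementary particles, including, inter alia, the prediction of the weak neutral current.”

- Peter Higgs and François Englert shared the 2013 Nobel Prize in Physics “for the theoretical discovery of a mechanism that contributes to our understanding of the origin of mass of subatomic particles, and which recently was confirmed through the discovery of the predicted fundamental particle, by the ATLAS and CMS experiments at CERN’s Large Hadron Collider”.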

Recommendations

- ‘What Are Quarks?‘, Evincism

- ‘Quantum Mechanics Explained: Mathematical Guide for Beginners‘, Evincism

- ‘What is Antimatter – Is Antimatter Real?‘, Evincism

- ‘Odd Muon G-2 Experiment Setbacks the Standard Model of Physics‘, Evincism

- ‘New Exotic Particles Discovered at CERN by the LHCb‘, Evincism